Unit 6: Priority queues

1 The Priority Queue ADT

This unit is about a new ADT called the Priority Queue, and by far the most common data structure used to implement this ADT which is called a heap. As we will see, priority queues combine the simplicity of stack and heap ADTs, with some ideas of tree-based data structures that we saw with ordered maps.

Recall that the Stack and Queue ADTs are both “linear” data structures which support insertion, peeking, and removal — but not arbitrary lookups in the middle. The difference between them is the order that removals happen; queues are FIFO and stacks are LIFO.

Priority Queues are similar in that we can insert, peek, and remove single items, but the removal ordering is different. Instead of being based on the order in which things were inserted, PQ removal depends on the ordering of the elements themselves. In a Maximum Priority Queue, the only element we can peek at or remove is the largest that has been inserted so far. Specifically, a Max PQ has these four methods:

add(element): Insert a new element (which must beComparable)peekMax(): Return (but don’t remove) the largest elementremoveMax(): Return and remove the largest elementsize()

As you might guess, there is also something called a Minimum Priority

Queue, which is exactly the same except it has peekMin() and

removeMin() instead. It’s easy to find applications of both kinds of

priority queues, depending on what you want to do, and everything works

exactly the same either way. Just remember that in a Priority Queue you

can normally just do one or the other (remove/peek at the min or max,

but not both).

Java note: The

PriorityQueueclass in Java is a minimum priority queue. The equivalent methods topeekMin()andremoveMin()are called simplypeekandpoll. We are using the names likepeekMin()orpeekMax()etc. just so that it’s easier to remember what kind of PQ we are dealing with.

In practice, in Java it’s enough to only have one kind of PQ

implementation (min or max), because we can easily change the

comparison operator (Comparable interface in Java)

to work however we like. It’s also common to store priorities and values

together in a PQ, which is also easy to do if you use something like the

key-value pair class we looked at in the previous unit.

1.1 Implementations with what we know so far

Of course we are going to learn about a new awesome data structure that’s absolutely perfect for implementing a PQ efficiently. But as usual, it’s useful to think about how we can use things we already know about to get the job done.

Unsorted Array/Linked List: Here insertion would just mean adding at the beginning or end, and takes constant time (well, amortized constant-time for an unsorted array). But the removeMax() operation would be O(n), as you’d have to iterate along every element in the PQ to find the maximum, and then remove it. And

peekMax()would have the same linear-time cost asremoveMax().Sorted Array/Linked List: The tradeoff here is the opposite: we get fast

peekMax()andremoveMax()since the largest thing would always be at the end of the array or linked list. But theadd(e)operation would be O(n), as you have to find the spot in the sorted order and (in the case of arrays) make room by shifting al the elements around.Balanced Search Trees: Using a balanced search tree data structure such as AVL or 2-3-4 trees actually gets the job done somewhat-reasonably here. Insertion of course is \(O(\log n)\). To do

peekMax()is also \(O(\log n)\)since we just have to go to the rightmost node in the tree, andremoveMax()` has the same big-O cost of \(O(\log n)\).But using a balanced search tree here is kind of overkill. These trees are complicated and their implementations are tricky, in order to support quickly looking up anything in the tree, not just the largest element. If only we had some kind of balanced tree that was organized according to the maximum rather than the sorted order…

2 Heaps

We’re going to use something called a Binary Heap (this is usually the type of heap people mean when they just say “heap”). Just as a BST is a type of binary tree with the data organized in a certain way, a Heap is a type of binary tree with the data organized in a different way.

Heaps are really good at being PQs, to the point that using much else would be a little weird. As a result, heaps and Priority Queues are often mixed up by people who haven’t learned the difference between an ADT and a data structure. PQ is the ADT. Heap is the data structure.

Heaps are binary trees with two extra rules:

The priority of a parent is greater than or equal to the priority of its children. We know nothing about the comparative priorities of the left and right children. We only know that the parent is of higher priority than the children.

The tree is complete, meaning it is as short as possible. Furthermore, on the last level of the tree, all the leaves must be all the way over to the left.

Notice crucially that we can have multiple nodes with the same priority in the heap, unlike Maps where we usually only want one instance of each key in the data structure.

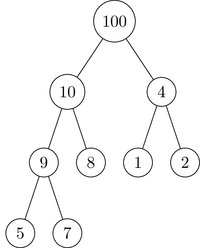

Here’s an example of a heap, which follows all the rules above:

3 Fixing a Heap

Imagine you have a heap in every respect, except that just one of the nodes violates the heap order property. How would you fix it?

Fortunately, there is an easy way to fix the heap order property without changing the structure (the completeness property): If the node is bigger than its parent, swap ’em and keep swapping on up until the parent is bigger than that node. That’s called a “bubble up” operation.

The other option is that the node is smaller than one of its children. Then you take whichever child is larger, and swap that with the parent, then keep doing that on down the heap until the node’s children are smaller than it is. That’s called a “bubble down” operation.

And that’s it! We can fix the heap ordering of any single element by either doing a bubble down or a bubble up. And it’s pretty easy to see that both of those are going to be \(O(height)\) time operations, which in a heap is always \(O(\log n)\) since heaps are complete. The bubble down and bubble up are what allow you to…

4 Insert

Inserting for the add() method is pretty simple.

Place the new data in the next available

spot in the tree (in the above heap, this is the left child of 8). Now,

we perform a “bubble up,” by running the following loop:

While the new element is not at the root and is larger than its parent:

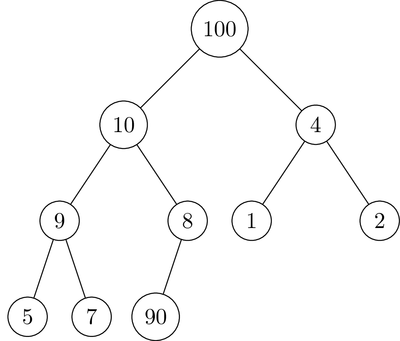

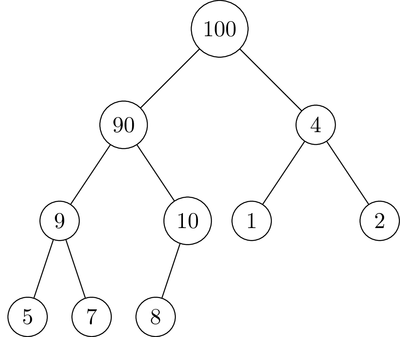

Swap the new element with its parentLet’s take the above tree, and add an element with priority 90. We would go through the following steps:

Add the data to the first open slot in the heap.

90 > 8, so swap!

90 >10, so swap!

90 < 100, so stop!

So the add(e) method for a heap just involves adding one new leaf node, then doing a

single bubble up, which is \(O(\log n)\) time.

5 RemoveMax

Doing a peekMax() operation just means returning the root of the tree,

so that’s going to be \(O(1)\).

However, to remove the max you have to actually change the heap.

Insertion involved a bubble-up, so as you might guess removal requires a

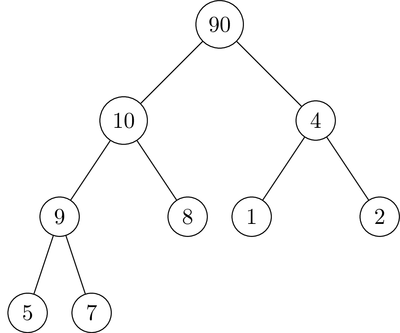

bubble-down. To do a removeMax(), you take the last element in the

tree (in our example, after the insertion of 90, this is 8), and put it

as the root. Then,

While the new element is smaller than at least one of its children:

Swap with the largest childHere’s the process. First, we put the 8 at the root.

Now, 8 < 90, so perform that swap.

Now, 8 < 9 AND 10, so perform a swap with the larger of the two.

And we’re finished!

How long did that take? Again, that took \(O(\log n)\) time.

6 Implementing a Heap

This doesn’t seem so hard to implement. We just take our familiar binary tree Node class, write some methods bubbleUp and bubbleDown, and be done with it! Of course, we’d have to somehow have a pointer to the first available open spot so we could do an insert, and a pointer to the last element, for a removeMax, so maybe it’s not easy as all that.

It turns out, though, that when working with a complete tree, it is unnecessary to actually make a Node class and store this as a tree. Instead, we’ll use the tree image to help us see what’s going on, while actually storing the priority/element pairs in an array. That’s right: heaps are actually trees, but we store the nodes in an array instead of using links.

The heap nodes are stored in a level-order traversal in the array.

That way, the root node is always first (index 0), and the “last”

element (which is swapped with the root for a removeMax) is always

last, at index size-1.

We don’t have explicit pointers between nodes. Instead, we use math on the array indices. The root is index 0, its children are indices 1 and 2, the children of 1 are at 3 and 4, the children of 2 are at 5 and 6, and so on. In general, for a node at index i, it is always the case that:

- The left child of i has index \(2i+1\)

- The right child of i has index \(2i+2\)

- The parent of i has index \(\lfloor (i-1)/2 \rfloor\).

To compute the parent index, remember in Java (and most programming

languages) that integer division

gives the floor already, so if i is an int, we can just compute the

parent index as (i-1)/2.

It would be stored in an array as:

[90, 10, 4, 9, 8, 1, 2, 5, 7]And you can see, for example, that the key 4 is at index 2 in this

array. So its parent (90) is at index (2-1)/2 = 0, its left child (1) is at

index 2*2+1=5, and its right child (2) is at index 2*2+2=6.

This is what makes heaps awesome and fast! In particular, the fact that we can implement heaps in an array greatly cuts down on their size in memory (since there are no pointers to worry about), and it’s the reason why the \(O(\log n)\) of a heap operation is a much “lighter” big-O than the \(O(\log n)\) of an AVL tree operation.

7 Heapify

Now, suppose we have an array full of elements and we want to create a brand new heap, filled with all these elements (note that we don’t always have this array ahead of time, so what we’re about to talk about won’t always be appropriate). How long does this take?

Here’s what you might think of doing:

heapify_first_try(array):

H = new empty heap

for each (element, priority) in array:

H.insert(priority, element)

return HWell since each insert() operation is \(O(\log n)\) time, and we do it n times, the total is \(O(n\log n)\).

But wait! Since we are storing the heap in an array, and we start out with an array, we can just do this operation “in place”! What you do is pretend that you have a heap (even though it’s totally out of heap-order), then call bubble-up or bubble-down on each element to put it in its proper place. If we do bubble-ups, this looks like:

heapify_second_try(array):

H = heap initialized with array (out of order)

for ii from 0 up to n-1:

H.bubbleUp(ii)

return HVery cool! This will certainly be a bit faster in practice. But unfortunately, it’s still \(O(n\log n)\) time, so not really asymptotically faster than before.

But wait! What if we did bubbleDowns instead of bubbleUps? That would mean starting from the bottom of the heap, but that’s fine since we have everything in an array. And this might be faster because most heap elements are “close” to the bottom levels and so don’t have very far to go in a bubbleDown operation!

heapify(array):

H = heap initialized with array (out of order)

for ii from n-1 down to 0:

H.bubbleDown(ii)

return HNow if you do our normal worst-case analysis, you multiply the n times through the loop, times \(O(\log n)\) for the worst-case cost of bubbleDown, for \(O(n\log n)\) total again.

But wait! Is that really how much it costs? Think about the last row of the heap. It contains about \(\tfrac{n}{2}\) items, and each one of them has nowhere to go for a bubbleDown. The next row up has about \(\tfrac{n}{4}\) items, each of which has to go down at most 1 level in a bubbleDown. The whole picture looks like this:

| Level | # of nodes in level | bubbleDown levels | Total |

|---|---|---|---|

| top row | 1 | lg n | lg n |

| 2nd row | 2 | lg n -1 | 2 (lg n - 1) |

| 3rd row | 4 | lg n - 2 | 4 (lg n -2) |

| … | |||

| 2nd to last | n/4 | 1 | n/4 |

| last | n/2 | 0 | 0 |

Now we have to add up the last column. Time for some math:

\[ \sum_{i=0}^{\lg n} i \frac{n}{2^{i+1}} = \frac{n}{2} \sum_{i=0}^{\lg n} \frac{i}{2^i} \le \frac{n}{2} \sum_{i=0}^\infty \frac{i}{2^i} \]

Well that infinite series converges to a finite number because of the Cauchy ratio test that you might have learned about in Calculus. In fact, the sum of that infinite series is 2. So the total is \(O(n)\).

HEAPIFYING IS THE SAME BIG-O AS JUST COPYING THE ARRAY!

Your mind has been blown. This is the first (and only) time in this class where our normal ways of multiplying the cost of the worst-case of each nested loop structure is correct but not tight - meaning this big-O is too big. It’s important to know that this can happen sometimes, even if you aren’t sure how to do this kind of analysis with summations on your own.

8 Heapsort

Suppose we want to put an array of numbers into increasing order (sort them). How would you do it? You would probably come up with something which is \(O(n^2)\). Could we use a heap to make this faster?

On the surface, heapsort is very easy: load everything into your heap, then pull it out, one by one. They’ll come back out in order. Loading everything in? If we do it bottom-up, it’s O(n). Taking everything out? O(n log(n)). So, total runtime O(n log(n)). But, it seemingly requires two arrays!

Consider the following sequence of numbers: 0 2 6 5 3 1 4. We’d like

to put these in increasing order. Let’s start by heapifying in place.

I’ll put a | in the array; everything to the right of it has been

heapified, everything to the left has not.

We start by putting the last \(\lfloor\frac{n+1}{2}\rfloor\)

things onto the bottom level of the

heap; this doesn’t require any work: 0 2 6|5 3 1 4. We then put the

next \(\lfloor\frac{n+2}{4}\rfloor\) things on the next level, and perform

bubble-downs on them:

0 2|6 5 3 1 40|5 6 2 3 1 4(2 has bubbled down)

Now there’s only one thing left to bubble down:

|6 5 0 2 3 1 4(0 swaps with its larger child, 6)|6 5 4 2 3 1 0(0 swaps with its larger child again, 4)

Great — we have completed the heapify phase and the data is completely organized into a max-heap.

Now, we can do removeMaxs. Here’s the first one. Now, the | will mean everything to the right is sorted, and everything to the left is still in the heap.

6 5 4 2 3 1 0|0 5 4 2 3 1|6(swapped the last thing with the top thing)5 0 4 2 3 1|6(0 has bubbled down)5 3 4 2 0 1|6(0 has bubbled down again)

Do another removeMax, again swapping the top thing for the last thing:

5 3 4 2 0 1|61 3 4 2 0|5 6Swap the 1 and the 5)4 3 1 2 0|5 6(1 has bubbled down)

Again:

4 3 1 2 0|5 60 3 1 2|4 5 6(swap the 4 and the 0)3 0 1 2|4 5 6(0 has bubbled down)3 2 1 0|4 5 6(0 has bubbled down)

Again:

3 2 1 0|4 5 60 2 1|3 4 5 6(swap the 0 and the 3)2 0 1|3 4 5 6(0 has bubbled down)

Again:

2 0 1|3 4 5 61 0|2 3 4 5 6(swap the 1 and the 2)

Again:

1 0|2 3 4 5 60 1 2 3 4 5 6(swap, and we’re sorted)

\(O(n \log n)\), in place! It turns out this is the absolute best we can do in comparison-based sort.

By the way, the typical \(O(n^2)\) sort, the algorithm most people come up with on their own, is called bubble sort. Don’t use it! President Obama would be disappointed.