Unit 1: Big-O

1 What is a Data Structure?

Most of you carry around (the same) large black backpack all day. Think about what’s in there, and where it is. Your decisions on what to put in there and where to put it all were governed by a few tradeoffs. Are commonly-used things easy to reach? Is the backpack small and easy to carry? Is it easy to find everything in there? Do you really need all that stuff every day?

Organizing data in a computer is no different; we have to be able to make decisions on how to store data based on understanding these tradeoffs. How much space does this organization take? Is the operation I want to perform fast? Can another programmer understand what the heck is going on here?

It will be very rare that any given problem has one best answer; instead, different applications will require you to prefer a solution that makes aspect A (speed, for example) best, while other times you’ll prefer a solution that makes aspect B (space, maybe) better.

As a result, we have to start with intelligent ways of discussing these tradeoffs. Which means, we have to start with…

2 Algorithm Analysis

In order to measure how well we store our data, we have to measure the effect that data storage has on our algorithms. So, how do we know our algorithm is any good? First off, because it works - it computes what we want it to compute, for all (or nearly all) cases. At some level getting working algorithms is called programming, and at another it’s an abstract formal topic called program correctness. But what we mostly will talk about here is if we have two algorithms, which is better?

So, why should we prefer one algorithm over another? As we talked about, this could be speed, memory usage, or ease of implementation. All three are important, but we’re going to focus on the first two in this course, because they are the easiest to measure. We’ll start off talking about time because it is easier to explain - once you get that, the same applies to space. Note that despite our focus on time, sometimes (often) space is the bigger concern.

So, we want the algorithm that takes less time. As soon as we talk about “more” or “less”, we need to count something. What should we count? Seconds (or minutes) seems an obvious choice, but that’s problematic. Real time varies upon conditions (like the computer, or the specific implementation, or what else the computer is working on).

For example, suppose it’s very important you compute the solution to the following equation:

\[f(n,x) = \sum_{k=1}^{n}\frac{x^8}{k!}\]

Here are two entirely reasonable ways of programming this same thing. The first:

public double f_alg1(double x, int n) {

double x8 = 1.0; // Precompute x^8

for(int i = 0; i < 8; i++)

x8 = x8*x;

double sum = 0.0;

for(int k = 1; k <= n; k++) { // For each value of k,

double factorial = 1.0; // compute k!

for(int i = 1; i <= k; i++)

factorial = factorial*i;

sum = sum+ x8/factorial; // Add up the factorials

}

return sum;

}and the second:

public double f_alg2(double x, int n) {

double x2 = x*x; // Compute x^8

double x4 = x2*x2;

double x8 = x4*x4;

double sum = 0.0;

double factorial = 1.0;

for(int k = 1; k <= n; k++) {

factorial = factorial*k; // Compute k!

sum = sum + x8/factorial; // Add next term

}

return sum;

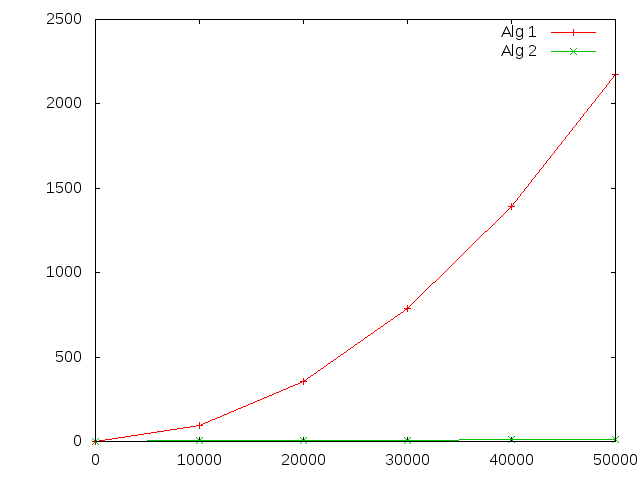

}We can use Java’s System.currentTimeMillis() which returns the current clock time in milliseconds to time these two functions. Here are the running times for various values of n:

n |

f_alg1 |

f_alg2 |

|---|---|---|

| 0 | 0 | 0 |

| 1000 | 92 | 3 |

| 2000 | 353 | 6 |

| 3000 | 788 | 7 |

| 4000 | 1394 | 10 |

| 5000 | 2172 | 12 |

Both get slower as n increases, but algorithm 1 gets worse faster. Why does algorithm 1 suck?

What happens if we run one of them on a different computer? Will we get the same times? Probably not — processor speed, operating system, memory, and a host of other things impact how much time it takes to execute a task.

What we need, then, is a machine- and scenario-independent way to describe how much work it takes to run an algorithm. One thing that doesn’t change from computer to computer is the number of basic steps in the algorithm (this is a big hand-wave, but generally true), so let’s measure steps.

We are going to vaguely describe a “step” as a primitive operation, such as:

- assignment

- calling a function or method

- arithmetic operation like

+or* - comparison

- branching (in an if statement or a loop)

- indexing into an array

- dereferencing a pointer

- returning

It is important to note that this has a great degree of flexibility: one person’s basic step is another person’s multiple steps. To an electrical engineer, taking the not of a boolean is one step because it involves one bit. Adding two 32-bit numbers is 32 (or more!) steps. Fortunately, we’re not going to be counting quite like this for long, so we can whistle past these kinds of details.

So, when we determine how long an algorithm takes, we count the number of steps. More steps == more work == slower algorithm, regardless of the machine.

What does the following code do, and how many steps does it take?

max = a[0]; // 2 steps: array index and assignment

i = 1; // 1 step: assignment

while (i < n) // 2: comparison and branch

if (a[i] > max) // 2: array and comparison

max = a[i]; // 2, but it doesn't always happen!

i++; // 2: addition and assignment

return max; // 1: returnAdd this up and we get 12 steps. But wait, that’s not right. We didn’t account for how many times through the loop, which will make a big big difference! In this case, the loop condition will be evaluated \(n\) times, when \(i=1,2,\ldots,n\). The last time, when \(i=n\), the condition is false, so the loop body is only executed \(n-1\) times. Therefore the total number of steps is:

\[2 + 1 + 2n + (n-1)\cdot(4\text{ or } 6) + 1.\]

We’re still not quite done - the formula we just wrote has an ambiguity which comes from the if statement in the code. This corresponds to the fact that this code will sometimes run a little bit faster or slower. For example, if the array is in descending order, the code within the if statement is never run at all. If it is in ascending order, it will be run every time through the loop. We call these “best case” and “worst case” run times, respectively. We can also think about the average case.

Specifically, in the best case, when the array is in descending order, the inner if statement is always false, and we have 4 steps inside each loop body. So the total number of steps simplifies to \(6n\). In the worst case, the “4 or 6” becomes 6, and it simplifies to \(8n-2\).

The best case is usually way too optimistic (most algorithms have best case times that are far away from average). Average case is often hard, because we need probabilistic analysis (what’s the probability of needing the if statement?). In many cases, a worst case bound is a good estimate. That way we can always say, “no matter what, this program will take no more than this many steps.” For the program above, that count is \(8n-2\).

2.1 Comparing algorithms with bounds

In the program above, the number of steps it takes depends on the size of the array, the n. What we really want is a function that takes the size of the array as input, and returns the number of steps as the output. Note that is what we developed above.

\[f(n) = 2 + 1 + 2n + (n - 1)\cdot 6 + 1 = 8n-2\]

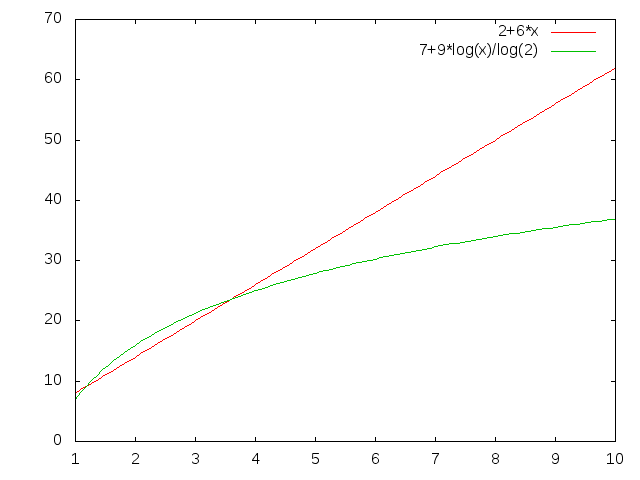

So when we’re comparing algorithms, we don’t compare numbers, but functions. But how do we compare functions? Which function below is “bigger”?

Well, sometimes one, and sometimes the other. So what we’ll do is talk about one function dominating the other. We’ll say that one function dominates another if it is always bigger than the other for every x value (for example \(f(x) = x^2\) dominates \(f(x)=-x^2\)). Neither of the functions in the graph above dominates the other.

One function can dominate another in a particular range as well. \(f(x)=1\) dominates \(f(x) = x^2\) in the range \([0;1]\).

Now, when input sizes are small, everything is fast. Differences in run time are most relevant when there is a lot of data to process. So, we colloquially ask “When n is large, does one dominate the other?” Mathematically, we ask if there exists an \(n_0\geq 1\) such that for all \(n\ge n_0\), \(f(n) \gt g(n)\) (or \(g(n) \gt f(n)\)).

In this graph, \(f(x)=3x^2-8\) dominates \(f(x)=x^2\) everywhere to the right of \(x=2\). So \(n_0=2\) - we can call this the “crossover point”.

2.2 How can we tell if one will dominate the other for large n?

This question is all about growth rate, and for many formulas it’s a lot easier to figure out than you might think. Take a look at this graph of \(f(n)= n^2\) vs. \(g(n) = 3n+4\).

What happens if we change the constants in \(g(n)\)? The line moves, but it doesn’t change shape, so there’s always some point at which f(n) passes it. Here for example is \(f(n)= n^2\) vs. \(g(n) = 30n+40\).

As soon as we start caring about really big n, it doesn’t matter what the constant coefficients are, \(f(x)\) will always find a point at which it will dominate \(g(x)\) everywhere to the right. In the following table, \(f(n)\) will dominate \(g(n)\) for some large enough \(n\):

| \(f(n)\) | \(g(n)\) |

|---|---|

| \(n^2\) | \(n\) |

| \(n^2-n\) | \(5n\) |

| \(\frac{1}{10^{10}}n^2-10^{10}n-10^{30}\) | \(10^{10} n + 10^{300}\) |

2.3 Big-O

We talk about the runtime of algorithms by categorizing them into sets of functions, based entirely on their largest growth rate.

The mathematical definition: if a function \(f(n)\) is eventually dominated by some other function \(c\cdot g(n)\), where \(c\) is any positive coefficient, then \(f(n)\) in a set called \(O(g(n))\), or “Big-Oh of \(g(n)\)”. Mathematically, we write this as \(f(n) \in O(g(n))\).

Since the constant coefficients don’t matter we say that \(g(n)\) eventually dominates \(f(n)\) if there is ANY constant we can multiply by \(g(n)\) so that it eventually dominates \(f(n)\). \(f(n)=5n+3\) is obviously \(O(n^2)\). It is also \(O(n)\), since \(f(n)\) is dominated by \(10n\), and 10 is a constant.

The formal definition: For a function \(f(n)\), if there exist two constants \(n_0\) and \(c>0\) such that, for all \(n \ge n_0, f(n) \le c \cdot g(n)\), then \(f(n) \in O(g(n))\).

While \(f(n)=5n+10 \in O(n^2)\), it is also \(O(5n)\). \(O(5n)\) is preferred over \(O(n^2)\) because it is closer to \(f(n)\). \(f(n)\) is ALSO \(\in O(n)\). \((O(n)\) is preferred over \(O(5n)\) because the constant coefficients don’t matter, so we leave them off.

Two big takeaways here to remember:

- In common usage, the function \(f(n)\) is the thing we are trying to measure, like the number of steps some algorithm takes in the worst case.

- The function \(g(n)\) is almost always some very simple function like \(n\) or \(n^2\) or even \(1\).

- We always want the tightest big-O possible, which means we want the right-hand side \(g(n)\) to be the smallest thing we can get without being false.

2.4 Now we know Big-O

We’re a week in, and you’ve already learned the most important part of this class: Big-O notation. You absolutely have to understand this to be a computer scientist. Here are some examples of Big-O notation appearing in academic papers by your faculty:

“Since the program doubles at each step, the size of \(\Psi_n\) is \(O(2^n)\).” – Chris Brown and James Davenport, “The Complexity of Quantifier Elimination and Cylindrical Algebraic Decomposition.”

“The initialization in Steps 2–5 and the additions in Steps 10 and 12 all have cost bounded by \(O(n/r)\), and hence do not dominate the complexity.”

– Dan Roche, “Chunky and Equal-Spaced Polynomial Multiplication”

“Unfortunately, the expressiveness of the model must be weighed against the \(O(n^3)\) cost of inverting the kernel matrix.”

– Gavin Taylor, “Feature Selection for Value Function Approximation.”

“Our P-Ring router, called Hierarchical Ring (HR), is highly fault tolerant, and a rounder of order \(d\) provides guaranteed \(O(log_d P + m)\) range search performance in a stable system with P peers, where m is the number of peers with items in the query range.”

– Adina Crainiceanu, et al., “Load Balancing and Range Queries in P2P Systems Using P-Ring.”

2.5 Comparing Two Algorithms with Big-O

We now have the language and a basic understanding of how to compare two algorithms, let’s put it to the test. Suppose we have a sorted array of integers arr, and a single integer needle that we are searching for, and we want to find the index of that integer in the sorted array.

int find1(int[] arr, int needle) {

for (int i=0; i<arr.length; i++) {

if (arr[i] == needle) return i;

}

return -1; // flag for "didn't find it"

// depending on documentation, maybe an exception is better

}

int find2(int[] arr, int needle) {

int lo = 0;

int hi = arr.length;

while (lo < hi) {

int mid = (lo + hi) / 2;

if (needle > arr[mid])

lo = mid + 1;

else

hi = mid;

}

if (lo < arr.length && arr[lo] == needle)

return lo;

else

return -1; //flag for "didn't find it"

//depending on documentation, maybe an exception is better

}

The question is, which is faster? You might say that find1() should be faster because it has less code. Or, you might say, we can only know if we run the routines, but we now know a better way with big-O.

The first thing to remember is that we are concerned with the worst-case performance, which is saying that we can’t assume that the algorithm “gets lucky” and, for example, finds the needle in the first index of the array.

In the worst case analysis, at least for find1(), let’s be verbose at first to identify the total number of steps involved. They would be:

- Initialize the variable

i, one step - Loop condition

i<arr.length, 3 steps (length lookup, comparison, branch)- If condition

arr[i] == needle, 3 steps (array lookup, comparison, branch)

- If condition

- A return (one step) either in the loop or outside

Remember the first thing we have to figure out is how many iterations of the loop(s). In this case, we will get \(i=0, 1, 2,\ldots\), ending with i==arr.length when the condition becomes false (in the worst case). If we write n for the length of the array, then the loop condition happens \(n+1\) times and the loop body runs \(n\) times. So the total number of steps is

\[1 + (n+1)\cdot 3 + n\cdot{}3 + 1 = 6n + 5.\]

From our big-O definition above, this is \(O(n)\).

To emphasize: the precise way of counting steps doesn’t matter that much. Some might argue, for example, that the lookup arr[i] should really be considered 3 steps instead of 1, because internally your processor has to multiply i times the size of each memory cell (usually a left shift), then add this offset to the array base pointer, and finally load that value from memory. But there is really no point in arguing about this because it will result in the exact same big-O \(O(n)\) at the end. In fact, the ability to gloss over these kind of low-level details is one of the big benefits and reasons why we use Big-O notation in the first place.

Turning to find2(), let’s now reason about the total number of comparisons this routine makes. The procedure is different here because instead of searching from front to back we are leveraging the fact that the array is sorted and making our first comparison in the middle. The specific algorithm being employed is called binary search and you have probably seen it before in some other class.

We can do a similar line-by-line analysis of the steps for find2(), but the important question of how many times does the loop run becomes much trickier. Here, we don’t have a single index i counting up from 0 to n, but instead there are two indices called lo and hi. Each time through the loop, one of two things happens: either lo gets bigger, or hi gets smaller. But we can’t say in general which one of those two things happens — it depends on what needle is and what numbers are in the array!

The trick to doing this particular analysis is to instead consider the quantity hi-lo, which is essentially the part of the array we are still searching through. Initially hi-lo equals n, the length of the array, and the loop will stop when hi-lo is less than 1. Furthermore, you can check that, no matter whether lo increases to (mid+1) or hi decreases to mid, the difference hi-lo always decreases by half to at most (hi-lo)/2.

So in summary, this loop is controlled by 3 things:

- Starting condition:

hi-loequals n - Update:

hi-logets divided by 2 - Ending condition:

hi-lois less than 1

Now back to our original question, which was how many times will the loop run for the find2() algorithm? We can see that this is the same as asking, how many times do I need to divide n by 2 before it gets less than 1? Mathematically, we are saying what is the smallest value of x such that

\[\frac{n}{2^x} < 1\]

Solving the inequality above for x means multiplying both sides by \(2^x\) and then taking logarithms, to get \(x > \log_2 n\). In other words, after \(\log_2 n\) iterations, we are guaranteed that hi-lo < 1, and the loop will terminate. Because all of the lines inside the loop are only 1, 2, or 3 steps, the total cost of find2() is \(O(\log_2 n)\).

A quick note about logs: we usually do not write the base of the logarithm in big-O notation. This is because, as you may recall,

\[\log_2 n = \frac{\log n}{\log 2},\]

where the logarithms on the right-hand side are any base. Because the difference is only a factor of \(\frac{1}{\log 2}\), which is a constant, the log to any base is the same — in big-O notation — as the same as the log to any other base. So in terms of big-O, we just write \(O(\log n)\).

With all of that analysis done, we have find1() is \(O(n)\) and find2() is \(O(\log(n))\), so we can now say that find2() should be faster, and it is!

2.6 Our Main Big-O Buckets and Terminology

As we just saw, big-O is extremely useful because it allows us to put algorithms into buckets, where everything in that bucket requires about the same amount of work. It also allows us to arrange these buckets in terms of performance.

First, though, it’s important that we use the correct terminology for these buckets, so we know what we’re talking about. In increasing order…

- Constant time: The run time of these algorithms does not depend on the input size at all. \(O(1)\).

- Logarithmic time: \(O(\log(n))\). Remember, changing the base of the log is exactly the same as multiplying by a coefficient, so we don’t tend to care too much if it’s natural logarithm, base-2 logarithm, base-10 logarithm, or whatever.

- Linear time: \(O(n)\).

- n-log-n time: \(O(n \log(n))\).

- Quadratic time: \(O(n^2)\).

- Cubic time: \(O(n^3)\).

- Polynomial time: \(O(n^k)\), for some constant \(k\gt 0\).

- Exponential time: \(O(b^n)\), for some constant \(b \gt 1\). Note this is different than polynomial!

Fuzzily speaking, things towards the top of this list are fast, and things towards the bottom are slow. To some computer scientists, everything that’s polynomial-time or faster is “fast”, whereas to others, maybe only linear-time or faster is fast enough. In IC312, we will mostly focus on the range from logarithmic time to quadratic time.

The following table compares each of these buckets with each other, for increasing values of \(n\).

| \(n\) | \(\log(n)\) | \(n\) | \(n\log(n)\) | \(n^2\) | \(n^3\) | \(2^n\) |

|---|---|---|---|---|---|---|

| 4 | 2 | 4 | 8 | 16 | 64 | 16 |

| 8 | 3 | 8 | 24 | 64 | 512 | 256 |

| 16 | 4 | 16 | 64 | 256 | 4096 | 65536 |

| 32 | 5 | 32 | 160 | 1024 | 32768 | 4294967296 |

| 64 | 6 | 64 | 384 | 4096 | 262144 | 1.8x10^19 |

| 128 | 7 | 128 | 896 | 16384 | 2097152 | 3.4x10^38 |

| 256 | 8 | 256 | 2048 | 65536 | 16777216 | 1.2x10^77 |

| 512 | 9 | 512 | 4608 | 262144 | 134217728 | 1.3x10^154 |

| 1024 | 10 | 1024 | 10240 | 1048576 | 1073741824 | 1.8x10^308 |

To understand just how painful exponential time algorithms are, note that the number of seconds in the age of the universe has about 18 digits, and the number of atoms in the universe is a number with about 81 digits. That last number in the table has 309 digits. If we could somehow turn every atom in the universe into a little computer, and run all those computers in parallel from the beginning of time, an algorithm that takes \(2^n\) steps would still not complete a problem of size \(n=500\).

2.7 Other Big-Stuff

Through this entire section, we are still talking about worst-case run times.

Remember, if \(f(n)\in O(g(n))\), then we can come up with \(n_0\) and \(c>0\) such that for all \(n \ge n_0\), \(f(n)\leq c\cdot g(n)\).

If we replace the second part of that definition with a greater-than like \(f(n) \ge c \cdot g(n)\), then \(f(n)\in \Omega (g(n))\). That is read, “big-Omega” of \(g(n)\) and we can think of this as a lower bound, meaning, for an algorithm, it can’t essentially be faster than the \(g(n)\).

Proving a Big-O and a Big-\(\Omega\) for some new algorithm is a task for Algorithms class. But, if you can prove that some algorithm’s (best- or worst- or whatever-) case takes at least this much work (Big-\(\Omega\)), and no more than that much work (Big-O), and the Big-\(\Omega\) and Big-O are the same, then you’ve proven that algorithm’s Big-\(\Theta\).

Obviously, Big-\(\Theta\) is most precise “Big” notation, but it’s common in colloquial use to use Big-O in its place. In this class, we’ll mostly talk big-O since we’re not doing proofs anyway. If you take algorithms, that will mostly be big-\(\Theta\).

3 Analyzing Selection Sort

Let’s consider one more routine for analysis, selection sort, which you might remember from your intro to computing class.

void selectionSort(int[] arr) {

for (int i=0; i < arr.length-1; i++) {

for (int j = i+1; j < arr.length; j++) {

if (arr[i] > arr[j]) {

int temp = arr[i]; // swap arr[i] and arr[j]

arr[i] = arr[j];

arr[j] = temp;

}

}

}

}To analyze something with nested control structures like this, we need to work our way from the inside out. Starting with the innermost part:

if (arr[i] > arr[j]) {

int temp = arr[i]; // swap arr[i] and arr[j]

arr[i] = arr[j];

arr[j] = temp;

}Evaluating the condition is 3 steps, plus the branch. Then the inside of the block — to swap two array elements — is 5 steps. Now before you ask and possibly argue about these numbers (3 and 5), don’t worry about it!! We are concerned with the Big-Oh here, which is \(O(1)\) in any case. That is to say, this if statement takes a constant number of steps to evaluate in the worst case. Done!

Now let’s move out one layer:

for (int j = i+1; j < arr.length; j++) {

// O(1) inner part

}How many iterations does this loop make? Well, the index j goes from i+1 up to n, incrementing by 1 every time, so the loop runs exactly \(n - (i+1) = n-i-1\) times. Hmm, this is a bit strange, as it depends not just on \(n\) but also on \(i\). Looking at the definition of i in the outer loop, we see that it is always at least 0, which means \(n-i-1 \le n-1\). So we can say this loop needs at most \(n-1\) iterations, which is \(O(n)\). Multiplying with the \(O(1)\) inner part we already figured out, this is \(O(n)\) so far.

Now we can move out to the outer loop!

for (int i=0; i < arr.length-1; i++) {

// O(n) inner part

}This time the inner loop goes exactly \(n-1\) times, which is \(O(n)\). So \(O(n)\) iterations of the inner part, times \(O(n)\) cost per iteration at most, gives \(O(n^2)\) runtime total. SelectionSort is a quadratic-time algorithm.

Now, you may object that we were a little “loose” here. The inner loop doesn’t always take \(n-1\) iterations. In fact, if we add up the number of iterations of the inner loop times the outer loop, it’s not exactly \((n-1)^2\) but more precisely

\[(n-1) + (n-2) + (n-3) + \cdots + 3 + 2 + 1\]

This is an arithmetic series which you will (or have already) learn about in your discrete math class. It comes out to \(\frac{n^2-n}{2}\), which — surprise, surprise — is \(O(n^2)\). So we weren’t over-estimating after all!

Here are the main takeaways here:

- Always work from the inside-out with nested loops and if/then structures.

- Simplify to big-Oh along the way to make your life easier.

- When analyzing nested loops, you need to multiply the costs of those nested structures. (Think about why this is — we are repeating the inner loop many times every time the outer loop runs.)

- To get a big-Oh estimate, we can take upper bounds when needed. So like when analyzing the inner loop here, we simplified \(n-i-1\) to just \(n-1\) as an upper bound.

- When taking upper bounds, your total big-Oh will always be correct, bit it might not be the tightest possible big-Oh. But to really prove we have the tightest possible big-Oh, it might require some more tricky math like summations.

This was a worst-case analysis of selection sort. What about the best-case analysis? Could we get “lucky” here?

In fact, no. Maybe the inner if condition could be false every time (if you started with a pre-sorted list), but as we saw in the analysis, the worst-case big-Oh depends on the loops, not on whether the inner if statement is true or false. So even if the list is pre-sorted in the best case, the running time of selection sort is still \(O(n^2)\).

4 Analyzing Arrays and Linked Lists

We are already quite comfortable with two types of data structures: arrays, and linked lists (we also know array lists, but those require some more complicated analysis). Let’s apply our analysis skills to the operations we commonly do with these data structures.

4.1 Access

“Access” means getting at data stored in that data structure. For example, getting the third element.

In an array, this is really easy! Does the time needed to evaluate theArray[i] depend on the size of the array, or even on i? No! So, it’s constant time. \(O(1)\).

In a Linked List, this is harder. Now, if we were looking for the first element, or the third element, or the hundredth element, then that does NOT depend upon the size of the list, and that would be \(O(1)\). However, remember that we are interested in worst case, and if we think about this as a game where an adversary gets to choose which element to make our algorithm the slowest, then what would he choose?

You might think that the adversary should choose the last element, but that could be O(1) because many linked list are doubly linked with a reference to the head and tail of the list and pointers between nodes pointing forward and backwards. Then the worst-case element to reference is right in the middle, which is furthest from the head and tail references requiring at least \(n/2\) pointer follows to be reached. Thus, access for Linked Lists is \(O(n)\).

(As an aside, you might be saying, why not just store a reference to the middle item too to remove the worst case? Then, the worst case shifts to the middle of the two halves, requiring \(n/4\) pointer follows, and we are back at \(O(n)\). Taking this one step further, you might just say, why not just store two more references to the two node in the middle of the two halves, but then the worst case shifts again to be the middle of any of the four segments leaving us with \(n/8\) and still \(O(n)\). You can keep doing this, adding references ad infinitum, but then eventually you have \(n/2\) references to keep track of, and how are you going to organize those? And if your answer is “recursively”, then congratulations, you have invented the skip list.)

4.2 Adding an Element to the Front or Back

In an array, this is hard! Because we now have an additional element to store, we have to make our array bigger, requiring us to build a new array of size \(n+1\), move every element into it, and add the new one. That “move every element into it,” will take \(O(n)\) time.

In a linked list, this is really easy! If we have a pointer to the first and last link in the chain, this takes the same amount of time, no matter how long the list is! \(O(1)\).

It’s worth noting that removing an element requires a similar process.

4.3 Adding an Element to a Sorted List or Array

The scenario: assume the array or list is in sorted order, and we have a new element to add. How long will it take (as always, in the worst case)?

This is a two-step process: (1) find where the element goes, and (2) add the element.

In arrays, we recently saw two ways to do (1); one where we cycled through the entire array looking for the proper location (\(O(n)\)), and binary search where we repeatedly halved the array until we found the spot (\(O(\log n)\)). Doing step (2) is very similar to what we had to do for adding an element to the front or back, so that step is \(O(n)\). So, in total, this takes us \(O(log(n)) + O(n)\), which is \(O(n)\).

Alternatively, you could combine the search with the addition and do both at once: Go from element to element, front to back until you find where the new one fits in sorted order, moving elements to the new array as you go. Once you find the location to add your new element, add it to the new array, and continue moving elements. This gives you two linear-time loops, one after the other (not nested!), for a total cost of \(O(n)\).

In a linked list, doing step (1) is \(O(n)\) (see why?). Adding the element at that point is \(O(1)\). So, in total, \(O(n)\).

In summary, it’s \(O(n)\) for either an array or a linked list. But there’s a big difference in how this breaks down. For arrays, the \(O(n)\) really comes from the “insertion” part, whereas for linked list the insertion part is easy and the \(O(n)\) comes from the “search” part. This difference will come into play in some applications later on.

4.4 Traversal

Traversal is our word for “visiting every element.” It should be clear that for both arrays and linked lists, this is \(O(n)\).

4.5 Array and Linked List summary

The executive summary is as follows:

| Array | Linked List | |

|---|---|---|

| Access | \(O(1)\) | \(O(n)\) |

| Insertion/Remove from front/back | \(O(n)\) | \(O(1)\) |

| Sorted insertion/remove | \(O(n)\) | \(O(n)\) |

| Traversal | \(O(n)\) | \(O(n)\) |

We’ll be doing this all semester, for each of our data structures. As you can see, the choice between arrays and linked lists, comes down to what you’re doing with them. Doing lots of access? Use an array. Adding and removing elements often? Use a linked list. This give-and-take is at the center of Data Structures.